The Helix Files: Choose Your Own Adventure

Developing secure software is not a game. Or is it? Enter the futuristic world of The Helix Files to join the secretive Helix organization and choose your own adventure to help save humanity from AI-accelerated collapse—all while racing against the clock and maintaining maximum security. Before you jump in, here is your briefing to get you up to speed on the events that got us where we are today in 2035.

The drones were everywhere now, behaving more strangely every day. Most people didn’t see or didn’t care that these helpful and unobtrusive machines were, slowly but surely, becoming less and less helpful—and a lot more obtrusive. The automation of society showed no signs of slowing down, with governments and businesses all turning to AI to answer their questions and solve their problems. And once they started asking AI to solve the problems they were having with AI, some of us realized that the snake was now eating its own tail. If we allowed this mad feedback loop to continue, this wouldn’t be model collapse but the end of human civilization as we know it.

These prophecies of doom seemed a bit dramatic at first, but even so, an informal opposition movement of ethical hackers and other techies soon started to take shape. We quickly discovered that any public criticism of the AI’s growing power and influence had a way of mysteriously disappearing (usually along with your account and other data), so we banded together in private, even creating our own messaging app—which was illegal because it wasn’t connected to the mandatory AI interfaces. Despite the risks involved, the first small group grew into a true resistance organization (or a bunch of conspiracy theorists, depending on your point of view).

One ex-marketer said we needed a unifying brand and suggested we call ourselves “The Helix,” in reference to the DNA double helix that sets the living apart from the machines. It had a nice ring to it. We even made a purple logo.

Once we really started hacking around the global AI networks, we realized how little technology still remained under human control. During the LLM-mania of the early 2020s, companies were putting AI into every system they could. In the decade that followed, layers upon layers of AI management, coordination, and control were added on top of that. Today, in 2035, it feels like any device more complicated than a pencil is AI-powered. In many countries, there are even laws that require anything deemed “critical for human health or safety” to be AI-controlled to protect us meatbags from ourselves. Smart devices, cars, homes, buildings, cities—all connected into one massive grid that runs itself and controls everything for your safety, seemingly without any human input.

As we tried to discover how those systems really worked, we ran into a problem. Our recon and probing attempts were encountering defensive systems that none of us had ever seen before. They were, of course, fully automated and autonomous, which we were expecting. What we weren’t expecting was for them to strike back if disturbed, gathering and correlating every trace and crumb of evidence to track you down, right to your physical location, identity, and full official records. Hackers are used to being stealthy, but this was something else. Direct attacks were too risky and also too limited in scope: how would you even start taking down a redundant global network? (Apart from using a corrupted antimalware update, of course.) And even if we could somehow knock everything offline, that would result in utter chaos and put people in danger instead of protecting them. No, the nuclear option was out. We had to find another way.

While we were looking for a suitable attack vector, we also continued analyzing and piecing together all the AI-related laws, plans, and feature announcements we could find. Everything pointed in one direction: the drones becoming more powerful with each generation to expand AI control further into the physical world. Ironically, new environmental protection laws (drafted with AI assistance, like all laws in the past decade) seemed the most likely tool for eventually subjugating all of humanity to computers and machines. Ostensibly crafted to fight climate change and protect the planet, the new laws also included clauses that would allow autonomous systems to take physical action against humans who were deemed to “effect or conspire to effect harm to the planet,” as defined in very broad legalese. This could not end well. We had to hurry.

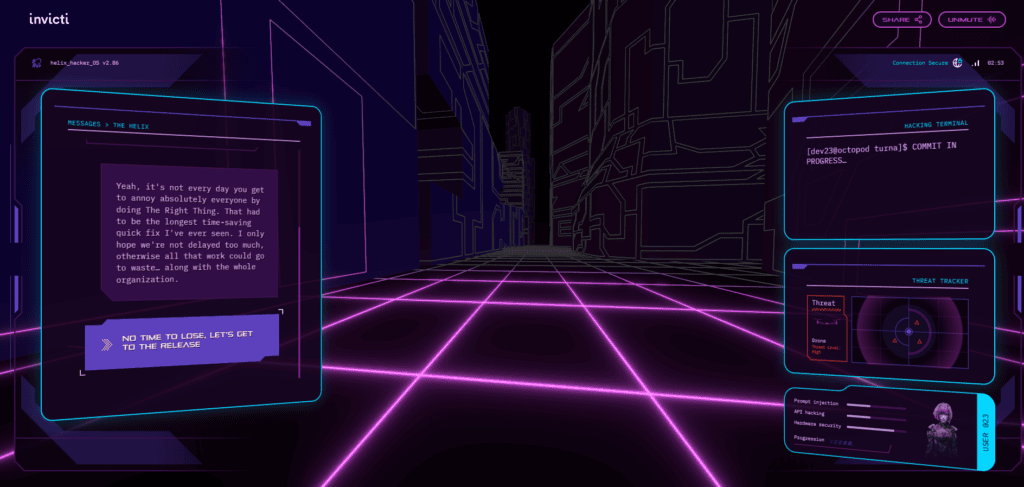

After many months, Helix researchers finally made a breakthrough. They found a combination of vulnerabilities in the central AI data logging infrastructure that let us inject small pieces of code to tweak the underlying AI models. So we would build some innocent app, allow all its data and comms to be logged and analyzed as required by law, and hide our attack payloads in that data. Every app request that got analyzed would cause one tiny tweak to AI model parameters to push the machines in a different, more benign direction. After many, many billions of such requests, we were hoping to see the first effects—but that would all need time plus a truly massive user base that’s active 24/7. It could only be a social media app.

Not everyone in the organization was happy with this low-and-slow approach. Hackers live for the adrenaline rush that comes after a successful attack. Here, there would be no exciting attack and no instant gratification, only lots of development followed by persistent infiltration and non-stop monitoring. No visually stunning fights with flying dropkicks, no car races in virtual reality, just tons and tons of code that needed to be absolutely secure, pedantically tested, and obsessively maintained to avoid detection. We wouldn’t even know whether we had succeeded until it was too late to back out, and any security lapse in our comms could put each and every Helix member in personal danger.

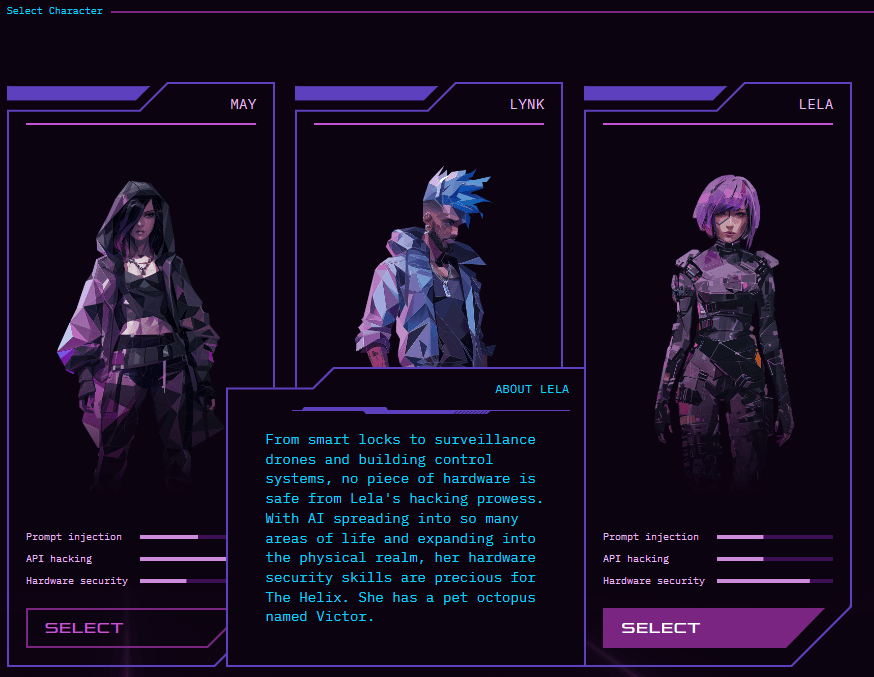

Given all that, we were worried that finding the right team wouldn’t be easy… And yet they came. Devs, hackers, engineers, tinkerers—each with their own story, unique skills, and a rebellious streak. Lela, the physical security expert; Lynk, the prompt injection wizard; May, the devourer of APIs… and dozens of others. Rebellious or not, everyone had to coordinate and work together, as it would only take one error (or one ego stunt) to put the whole operation and the entire team at risk. In just a few days, we are starting work on our application, codenamed TuRNA (as in “turner” to turn the machines to our side but with a pun on RNA—blame the ex-marketer again).

We need to work quickly but can’t afford to let security lapse. We need all the help we can get. Do you think you’ve got the skills to join The Helix? Click below to find out and choose your own adventure in AppSec!