Sensitive data exposure in public web assets: A hidden threat

How often do websites publicly expose sensitive data? Invicti security researcher Kadir Arslan decided to write a custom security check and see what he could find across the world’s 10,000 most popular sites – and what he found were hundreds of exposed secrets.

It would be hard to find a web application nowadays that doesn’t use third-party services and APIs. Most of these require some kind of access key, and that means lots of secret (or at least sensitive) credentials being stored and exchanged. What if someone made a mistake and stored sensitive data directly in the source of a web page? Armed with a passive custom security check in Invicti, we decided to see if we could find any cases of sensitive data exposure in popular websites.

What is sensitive data?

The definition of sensitive data depends on the type and owner of this data. In general, sensitive data means any information that a person, company, or institution does not want to expose publicly to avoid the risk of misuse by malicious actors. For people, this would usually be personally identifiable information (PII), while for companies, it could be proprietary corporate information. In the realm of web security, sensitive data includes secrets such as login credentials, access tokens, and API keys.

For the purpose of our research, we decided to define sensitive data as any sufficiently unique string that is used to access web resources. Examples of such sensitive strings include:

- Access tokens

- API keys

- Connection strings

All of these qualify as sensitive data because they must be kept secret and private to prevent security issues. For example, an exposed API token may allow attackers to bypass access controls or at least make unauthorized access easier.

How to scan for sensitive data in public web assets

We already know that developers occasionally hard-code passwords and other credentials into web pages or even leave them in comments, so I had the idea of using Invicti to write a custom security check that would find the most common API keys and similar tokens on popular websites. We decided to check the Alexa top 10,000 sites and also examine data scraped from Pastebin, the most popular public paste site.

Invicti’s custom security checks feature allows you to extend the built-in vulnerability detection capabilities with custom scripts. You can write active or passive scripts that specify attack patterns, analyze HTTP responses, and report potential vulnerabilities when the right criteria are met. Because we are analyzing third-party sites, we used only passive checks that do not issue any extra HTTP requests during scans (unlike active checks). For each HTTP request sent by the crawler, you can write a passive security check script to analyze the response. If your script determines that the response contains sensitive information, you can raise a vulnerability in the scanner.

We will go through the process of creating a custom script later on, but you can visit the Invicti Security GitHub repository to get the full script for identifying sensitive data exposure used for this research, complete with known patterns for sensitive data. The custom script was used to crawl the Alexa top 10,000 sites and approximately 1 million Pastebin URLs and search the responses for sensitive data. Pastebin imposes a rate limit that would prevent us from scanning quickly, so we used historical data scraped from Pastebin via archive.org and hosted it in our own test environments for analysis.

Defining signatures and regular expressions for sensitive data

To find sensitive data with our security check, we first need to decide and define what constitutes sensitive data. This requires two things: the right regular expression (regex) to match a specific type of data and a way to measure the randomness (entropy) of identified strings. The entropy check is important as an indicator of unique identifiers. If a string matches a known access key format and looks like it was generated randomly (has enough entropy), we can be practically certain that it is sensitive data, and we can raise a vulnerability.

For example, the following rules can be applied to detect a leaked Amazon AWS access key ID:

- Start with a four-character block: AKIA, AGPA, AROA, AIPA, ANPA, ANVA, ASIA, or A3T plus one other character (A–Z, 0–9)

- Continue with 16 random characters

With this information, we can create a regular expression as follows:

(A3T[A-Z0-9]|AKIA|AGPA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}
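To make the rule concrete outside Invicti, here is a minimal Python sketch that applies the same regex to a snippet of response text. The function name find_aws_key_ids and the sample page are hypothetical. The key is AWS's own documentation placeholder.

```python
import re

# The AWS access key ID pattern from the rules above: a four-character
# prefix (or A3T plus one character) followed by 16 uppercase letters/digits.
AWS_KEY_ID_RE = re.compile(r"(A3T[A-Z0-9]|AKIA|AGPA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}")


def find_aws_key_ids(text: str) -> list[str]:
    """Return every substring of text that matches the AWS key ID pattern."""
    # finditer + group(0) returns the whole match; re.findall would return
    # only the captured prefix group because the pattern contains a group.
    return [m.group(0) for m in AWS_KEY_ID_RE.finditer(text)]


page = '<script>var cfg = {accessKeyId: "AKIAIOSFODNN7EXAMPLE"};</script>'
print(find_aws_key_ids(page))
```

Because the pattern contains a capturing group, using `finditer` rather than `findall` avoids the common mistake of collecting only the four-character prefixes.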

Next, we need to check the randomness of each value matched with this regex. In this research, we used Shannon entropy as a measure of randomness (see the code listing below for the exact algorithm). To determine a reliable threshold value for entropy, we ran some tests on different random and non-random strings. The entropy testing included real samples of sensitive data as well as some strings that we created ourselves. The results were as follows:

| Sensitive data string | Entropy value |
|---|---|
| `AKIA6GF5VPDHNC7Q****` | ~4.08 |
| `a414d04b125cfbcc22b9c97b0428****` | ~3.57 |
| `glpat-se2-ZwN_AdAd4rF4****` | ~4.39 |
| `AIzaSyD8kljjH-39qYr6KMuMs_gt7aQStuG****` | ~4.92 |
| `TEST123` | ~2.52 |
| `ABCEXAMPLE` | ~2.92 |
| `ABAEXABPLW` | ~2.64 |

As you can see from the data, real secrets all had an entropy greater than 3, while non-random strings were below 3. Considering both the level of randomness and the length of potentially sensitive strings, we decided that 3 would be a good entropy threshold to eliminate false positive matches.
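Shannon entropy measures the average information per character: H = -Σ p·log2(p), where p is the relative frequency of each distinct character in the string. Here is a minimal Python version that follows the same formula as the JavaScript entropy function in the Invicti check later in this post. The function name is our own.

```python
import math
from collections import Counter


def shannon_entropy(s: str) -> float:
    """Shannon entropy in bits per character: -sum(p * log2(p)) over the
    relative frequency p of each distinct character in s."""
    n = len(s)
    if n == 0:
        return 0.0
    return -sum((count / n) * math.log2(count / n) for count in Counter(s).values())


# The non-random strings from the table above (the real secrets are masked there).
for sample in ("TEST123", "ABCEXAMPLE", "ABAEXABPLW"):
    print(f"{sample}: {shannon_entropy(sample):.3f}")
```

This prints 2.522, 2.922, and 2.646. Those values are consistent with the table, which appears to truncate rather than round the last value (~2.64).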

With this regex and entropy threshold, we have a simple and reliable way to detect strings that are almost certainly sensitive data, such as these real AWS keys (masked for privacy): AKIAISCW7NXHB4RL****, AKIA352CUBQERWTX****, AROAU6G0VVT0VXTV****, and AIPAMQNLYYXLUQQU****.
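Putting the two steps together, a detector reports only the regex matches whose entropy reaches the threshold. The following Python sketch illustrates that logic and is not the Invicti script itself. The function name is hypothetical, and the low-entropy string is a made-up false positive.

```python
import math
import re
from collections import Counter

AWS_KEY_ID_RE = re.compile(r"(A3T[A-Z0-9]|AKIA|AGPA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}")
ENTROPY_THRESHOLD = 3.0  # threshold chosen in the research above


def shannon_entropy(s: str) -> float:
    """Shannon entropy in bits per character."""
    n = len(s)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def detect_aws_key_ids(text: str, threshold: float = ENTROPY_THRESHOLD) -> list[tuple[str, float]]:
    """Return (match, entropy) pairs for regex matches at or above the threshold."""
    findings = []
    for m in AWS_KEY_ID_RE.finditer(text):
        value = m.group(0)
        h = shannon_entropy(value)
        if h >= threshold:  # low-entropy matches are treated as false positives
            findings.append((value, h))
    return findings


low_entropy = "AKIA" + "A" * 16  # matches the regex but is clearly not random
body = f"key1=AKIAIOSFODNN7EXAMPLE key2={low_entropy}"
for value, h in detect_aws_key_ids(body):
    print(f"{value}  entropy={h:.2f}")
```

Only the first string is reported (entropy of about 3.68). The second matches the regex but scores about 0.57, so it is discarded.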

Sensitive data exposed by top websites

Our research found several types of sensitive data publicly exposed on hundreds of websites from the Alexa top 10,000 list. In total, 630 sites revealed at least one type of secret, meaning that 6.3% of the world’s most-visited sites are exposing sensitive data. Before we get into the detailed numbers, let’s have a look at the most common types of exposed data.

Amazon Web Services (AWS) API keys

Depending on how you access AWS and what type of AWS user you are, AWS requires different types of security credentials. For example, you need a username and password to log into the AWS Management Console, while making programmatic calls to AWS requires access keys. AWS access keys are also needed to use the AWS Command Line Interface or AWS Tools for PowerShell.

When you generate a long-term access key, you get a pair of strings: the access key ID (for example, AKIAIOSFODNN7EXAMPLE) and the secret access key (for example, wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY). The secret access key can only be downloaded at the time you create it, so if you forget to download it or you lose it later, you’ll need to generate a new one. Access key IDs starting with AKIA are long-term access keys for an IAM user or an AWS account root user. Access key IDs that begin with ASIA are temporary access keys that you obtain through AWS Security Token Service (AWS STS) API operations. See the AWS docs for more information about AWS credentials.
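When triaging a leaked key ID, the prefix is a quick first hint about what was exposed. This small Python sketch encodes only the two prefixes described in the paragraph above. The ASIA and AROA sample IDs are synthetic, and every other prefix is deliberately left unclassified.

```python
# Prefix meanings as described above; other prefixes matched by the
# detection regex (AROA, AGPA, ...) are left unclassified in this sketch.
KEY_ID_PREFIXES = {
    "AKIA": "long-term access key (IAM user or root user)",
    "ASIA": "temporary access key (issued via AWS STS)",
}


def describe_access_key_id(key_id: str) -> str:
    """Triage a leaked access key ID by its four-character prefix."""
    return KEY_ID_PREFIXES.get(key_id[:4], "unclassified prefix")


for key_id in ("AKIAIOSFODNN7EXAMPLE", "ASIAIOSFODNN7EXAMPLE", "AROA" + "X" * 16):
    print(key_id, "->", describe_access_key_id(key_id))
```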

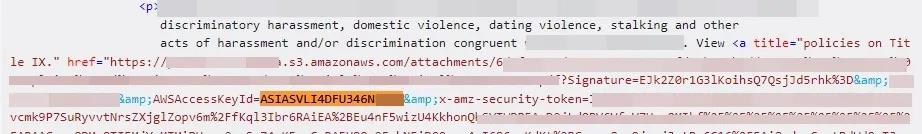

Our test identified 108 sites exposing a total of 212 AWS access keys, which means that 1.08% of the world’s biggest websites expose their AWS keys in web responses. While most of the sensitive data was encountered in HTML responses, some was also exposed via JavaScript and JSON files. Here are a few AWS keys from our results:

Google Cloud API keys

A Google Cloud API key is a string that identifies a Google Cloud project for quotas, billing, and monitoring. After generating a project API key in the Google Cloud console, developers include the key in every call to the API via a query parameter or request header. These keys are most often used with the paid Google Maps service to embed maps, search the Maps database, and use it in third-party apps. Provided some security restrictions are in place to limit unauthorized use (which could rack up someone else’s bill), you could say that it is normal for these keys to be exposed if they are only valid for Google Maps. The problem is that the same key can also be valid for other paid services.
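To see why an exposed key is directly usable, consider how it travels with each call. This Python sketch builds a Google Maps Geocoding API request URL with the key in the `key` query parameter. It also shows the `X-goog-api-key` header alternative, which is available where an API supports it. The key is a format-only placeholder, and nothing is sent over the network.

```python
from urllib.parse import urlencode

# Placeholder in the documented format ("AIza" + 35 characters); not a real key.
API_KEY = "AIza" + "X" * 35


def geocoding_request_url(address: str, key: str) -> str:
    """Build a Geocoding API URL with the API key passed as the `key` query parameter."""
    query = urlencode({"address": address, "key": key})
    return f"https://maps.googleapis.com/maps/api/geocode/json?{query}"


# Where an API supports it, the key can travel in a header instead.
HEADERS = {"X-goog-api-key": API_KEY}

print(geocoding_request_url("1600 Amphitheatre Parkway", API_KEY))
```

Because the key is the only credential in such a request, anyone who copies it from page source can build the same URL. Key restrictions (limiting which APIs and which referrers or apps may use a key) are what stand between that and someone else's bill.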

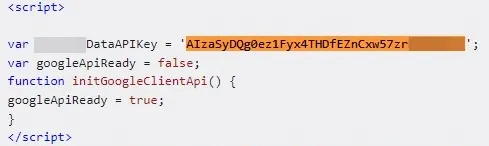

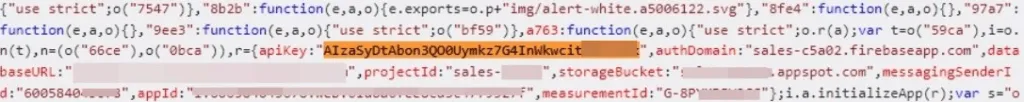

Google Cloud API keys start with AIza followed by 35 characters. For JavaScript and HTML responses, they can usually be detected using the apiKey="AIza..." signature. In total, we detected 171 Google Cloud API keys on 158 different websites, found in 95 HTML responses, 75 JavaScript responses, and 1 JSON response. Here are some examples of Google Cloud API keys:

Other commonly exposed keys and tokens

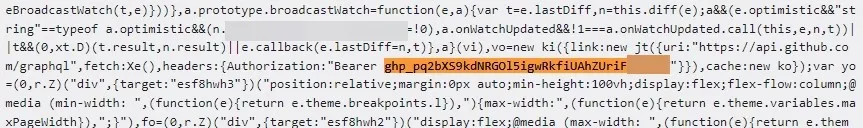

While we counted a total of 20 different types of sensitive data, AWS and Google Cloud keys were by far the most numerous provider-specific tokens. Here are a few examples of various tokens we found for other services:

GitHub personal access token

AWS AppSync GraphQL key

Google OAuth access token
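Most of these token types have recognizable formats, so a small pattern table can label each hit. The Python sketch below is an assumption-based illustration and does not reproduce the Invicti script. The Google Cloud pattern follows the "AIza plus 35 characters" rule above, with an assumed character set of letters, digits, "-" and "_". The other three patterns are commonly published formats, not taken from our research data.

```python
import re

# Assumed patterns (widely published formats, not the Invicti script's list).
TOKEN_PATTERNS = {
    "Google Cloud API key": re.compile(r"AIza[0-9A-Za-z_-]{35}"),
    "GitHub personal access token (classic)": re.compile(r"ghp_[A-Za-z0-9]{36}"),
    "AWS AppSync GraphQL key": re.compile(r"da2-[a-z0-9]{26}"),
    "Google OAuth access token": re.compile(r"ya29\.[0-9A-Za-z_-]+"),
}


def classify_tokens(text: str) -> list[tuple[str, str]]:
    """Return (token type, matched value) pairs for every pattern hit in text."""
    hits = []
    for label, pattern in TOKEN_PATTERNS.items():
        hits.extend((label, m.group(0)) for m in pattern.finditer(text))
    return hits


# The GitHub token below is the one used in the example later in this post.
print(classify_tokens('token = "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"'))
```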

Analyzing the data and response types

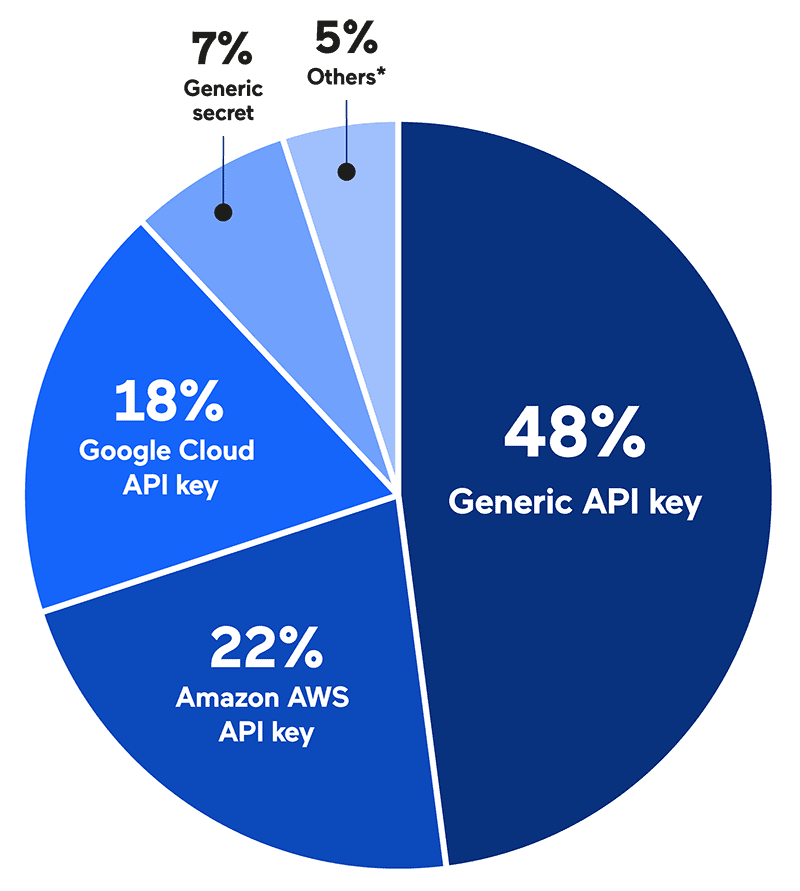

Looking at data from the Alexa top 10,000 sites, we identified 949 cases of possible sensitive data disclosure across 595 different websites. Nearly half of these were not provider-specific but what we call generic API keys – basically any tokens with the name apiKey (with variations). Exposed tokens that had secret in the name were classified as generic secrets.

Types of sensitive data exposed by Alexa top 10,000 websites

*Others: MailChimp API key, AWS AppSync GraphQL key, Facebook app secret, Slack Webhook, Amazon AWS secret key, Twitter access token secret, Github Private, Facebook App ID, Facebook OAuth, Nexmo Secret, Google OAuth access token, Symfony application secret, Sentry auth token
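As described above, tokens assigned to a name like apiKey (with variations) were counted as generic API keys, and names containing secret were counted as generic secrets. The exact patterns from the research are not reproduced here. The following Python sketch is a hypothetical approximation: the name variations, the quoted-value requirement, and the 16-character minimum are all our assumptions.

```python
import re

# Hypothetical approximation: a name containing "api key" (apiKey, api_key,
# API-KEY, ...) or "secret", assigned a quoted value of 16+ characters.
GENERIC_ASSIGNMENT_RE = re.compile(
    r"""(?P<name>[\w-]*(?:api[_-]?key|secret)[\w-]*)["']?\s*[:=]\s*["'](?P<value>[^"']{16,})["']""",
    re.IGNORECASE,
)


def classify_generic(text: str) -> list[tuple[str, str, str]]:
    """Return (category, name, value) triples for generic key/secret assignments."""
    results = []
    for m in GENERIC_ASSIGNMENT_RE.finditer(text):
        name = m.group("name")
        kind = "generic secret" if "secret" in name.lower() else "generic API key"
        results.append((kind, name, m.group("value")))
    return results


print(classify_generic('var apiKey = "abcd1234efgh5678ijkl";'))
print(classify_generic('{"client_secret": "s3cr3tValue0123456789"}'))
```

In practice, generic matches like these would still go through the entropy filter, because names such as apiKey are often assigned placeholder values.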

In terms of response types, over three-quarters of sensitive data strings (753) were exposed in HTML responses. Nearly all the others were revealed in JavaScript code, though a handful were also returned in JSON responses.

Types of responses exposing sensitive data from Alexa top 10,000 websites

Examples of sensitive data exposed on Pastebin

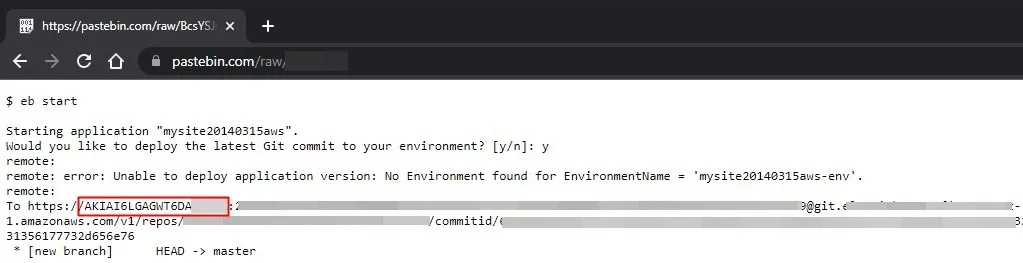

It might seem that data sent to sites such as Pastebin is limited to one-time use and only accessible through a random URL. In fact, pastes are publicly accessible and can be scraped automatically for analysis, so it’s important to avoid entering any sensitive data into such platforms. As mentioned earlier, we retrieved archived Pastebin pastes and analyzed them in a local test environment. Just like the live site data, the pastes contained sensitive data such as AWS access key IDs and database information:

AWS access key ID exposed on Pastebin

Google Cloud API key exposed on Pastebin

Another Google Cloud API key from Pastebin

So I exposed an access token – what’s the worst that could happen?

When a secret is exposed, the biggest threat factor is what can be done with that specific secret. For a real-world example showing what could happen, I chose myself as the victim. Imagine I created a personal access token (PAT) on GitHub and then accidentally exposed this token by forgetting to mask it when I took a screenshot for a blog post:

I could also expose my PAT by including it in a commit to a public GitHub repo or in any number of other ways. Once you have my token, you can discover my private repository with just a few lines of Python code (if you’re not sure how to do that, GitHub Copilot can give you a hand – just be careful not to trust it too much):

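```python
import requests

token = "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"
# GitHub accepts a personal access token in the Authorization header
headers = {'Authorization': 'token ' + token}
# List the repositories visible to the token's owner, including private ones
repos = requests.get('https://api.github.com/user/repos', headers=headers)
```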

A quick look at the response from the GitHub API reveals details of my private repo:

```
"id": 575484076,
"node_id": "R_kgDOIk0wrA",
"name": "private-repo-secrets",
"full_name": "kadirarslan-sensitive/private-repo-secrets",
"private": true,
"owner": {
  "login": "kadirarslan-sensitive",
```

And now you’re only a few lines away from cloning my private repo and all the top-secret proprietary code it contains:

```python
from git import Repo  # GitPython

# Embedding the token in the HTTPS remote URL authenticates the clone
HTTPS_REMOTE_URL = 'https://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:x-oauth-basic@github.com/kadirarslan-sensitive/private-repo-secrets'
DEST_NAME = 'cloned-private-project'
cloned_repo = Repo.clone_from(HTTPS_REMOTE_URL, DEST_NAME)
```

See if you can find any other secrets in there...
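Going back to the /user/repos call from this example: an attacker would not need to read the raw response. A few more lines of Python can filter it down to private repositories. In this sketch, the first entry in the sample body reuses values from the response excerpt above. The second entry is made up for contrast.

```python
import json

# Trimmed, hypothetical /user/repos response body. The first entry reuses
# values from the excerpt above; the second entry is made up for contrast.
response_text = """[
  {"id": 575484076, "name": "private-repo-secrets",
   "full_name": "kadirarslan-sensitive/private-repo-secrets", "private": true},
  {"id": 1, "name": "public-demo",
   "full_name": "kadirarslan-sensitive/public-demo", "private": false}
]"""


def private_repo_names(repos: list[dict]) -> list[str]:
    """Return the full names of repositories flagged as private."""
    return [repo["full_name"] for repo in repos if repo.get("private")]


# With the live API, pass repos.json() from the requests call instead.
print(private_repo_names(json.loads(response_text)))
```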

Writing a custom check to scan your applications for sensitive data exposure

Fortunately, the techniques we used in our research to uncover sensitive data in public sources can also be used to protect your own data and applications. If you know you have applications that use a specific type of confidential token, you can write a custom security check to search for sensitive data exposure in production or even before each release (when integrated into your CI/CD pipeline). Let’s see how you can use Invicti’s Custom Security Checks via Scripting feature to create a script that finds your secret tokens.

Defining the data pattern and regular expression

For this scenario, let’s assume your application uses a product key that you want to keep secret. Each product key is a unique string that starts with prodKey followed by a single digit surrounded by underscores and ends with 24 random alphanumeric characters, for example:

prodKey_1_SUP3RS3CR3TTH1NKT0D3T3CT

Whenever the secret follows a specific pattern, we can easily detect it with Invicti. First of all, we need a regular expression that matches the pattern, which in this case would be:

/(prodKey\_[0-9]{1}\_[a-zA-Z0-9]{24})/gm

Breaking this down, the regex matches:

- the string literal prodKey (case sensitive),

- the character _ at position 8,

- a single digit from 0 to 9 (inclusive),

- the character _ at position 10,

- a string of 24 characters (a–z, A–Z, or 0–9).
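Before wiring the regex into a scanner, it can help to check it against a few positive and negative samples. This Python translation is a sketch for local testing. The backslashes before "_" and the {1} quantifier in the JavaScript version are redundant, so they are dropped here. Python's `finditer` takes the place of the `g` flag. The `m` flag has no effect because the pattern uses no `^` or `$`. All samples except the first are made up.

```python
import re

# Python equivalent of the JavaScript regex above, minus redundant escapes.
PRODUCT_KEY_RE = re.compile(r"prodKey_[0-9]_[a-zA-Z0-9]{24}")

samples = [
    "prodKey_1_SUP3RS3CR3TTH1NKT0D3T3CT",   # matches: example from the text
    "prodkey_1_SUP3RS3CR3TTH1NKT0D3T3CT",   # no match: literal is case sensitive
    "prodKey_12_SUP3RS3CR3TTH1NKT0D3T3CT",  # no match: two digits
    "prodKey_1_SHORT",                       # no match: fewer than 24 characters
]
for s in samples:
    print(s, bool(PRODUCT_KEY_RE.search(s)))
```

Note that the pattern has no boundary at the end, so a longer alphanumeric run still matches on its first 24 characters. Append `\b` if that matters for your key format.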

Creating a passive security check in Invicti

Now that we have the regular expression, we are ready to write a custom security check script in JavaScript. Since we are only analyzing responses, a passive security check will be sufficient:

```javascript
function analyze(context, response) {
  // Calculate the Shannon entropy of a matched value
  function entropy(str) {
    const len = str.length;
    // Build a frequency map from the string
    const frequencies = Array.from(str)
      .reduce((freq, c) => (freq[c] = (freq[c] || 0) + 1) && freq, {});
    return Object.keys(frequencies).map(e => frequencies[e])
      .reduce((sum, f) => sum - f / len * Math.log2(f / len), 0);
  }

  // Regex for the secret token pattern
  var secretRegex = /(prodKey\_[0-9]{1}\_[a-zA-Z0-9]{24})/gm;
  // With the g flag, match() returns all matches; this check evaluates the first one
  var isMatch = response.body.match(secretRegex);

  // Report a vulnerability if the regex matches and the entropy value reaches the threshold
  if (isMatch && entropy(isMatch[0]) >= 3) {
    // Build and return an Invicti vulnerability object using a unique GUID
    var vuln = new Vulnerability("<YOUR_GUID_HERE>");
    vuln.CustomFields.Add("Sensitive Data Type", String("Secret Key for your application"));
    vuln.CustomFields.Add("Sensitive Data", String(isMatch[0]));
    vuln.CustomFields.Add("Entropy", String(entropy(isMatch[0])));
    return vuln;
  }
}
```

When you include this custom script in your security checks and run a scan, Invicti will passively analyze responses to crawler requests and report any occurrences of strings that match your product key. Again, because this is a passive check, finding sensitive data like this does not involve sending any special requests or payloads. To learn more about creating custom security checks like this, see our support page on custom security checks via scripting.
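The same pattern and threshold can also run as a pre-release gate in a CI/CD pipeline, before anything reaches a scanner. The following Python sketch is a hypothetical build-artifact scanner and not an Invicti feature. It assumes your build writes its output files to a directory (called dist here for illustration). Like the JavaScript check, it computes entropy over the whole match, including the prodKey_N_ prefix.

```python
import math
import re
from collections import Counter
from pathlib import Path

# Same pattern and threshold as the Invicti check above.
PRODUCT_KEY_RE = re.compile(r"prodKey_[0-9]_[a-zA-Z0-9]{24}")
ENTROPY_THRESHOLD = 3.0


def shannon_entropy(s: str) -> float:
    """Shannon entropy in bits per character."""
    n = len(s)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def find_product_keys(text: str) -> list[str]:
    """Return high-entropy product keys found in text (whole match, prefix included)."""
    return [
        m.group(0)
        for m in PRODUCT_KEY_RE.finditer(text)
        if shannon_entropy(m.group(0)) >= ENTROPY_THRESHOLD
    ]


def main(build_dir: str) -> int:
    """Scan every file under build_dir; return 1 if any product key is found, else 0."""
    found = False
    for path in sorted(Path(build_dir).rglob("*")):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for key in find_product_keys(text):
            found = True
            # Print only a prefix so the CI log does not leak the full key.
            print(f"{path}: possible product key exposure ({key[:12]}...)")
    return 1 if found else 0
```

A pipeline step could call `sys.exit(main("dist"))` so that a nonzero exit code fails the build whenever a key turns up in the release artifacts.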

Conclusion

As a result of this research, we have demonstrated that sensitive data exposure is not limited to small or neglected websites: it affects 6.3% of the world's most visited sites. If such data is found and used by malicious actors, they could access your internal environments, repositories, or billable services, depending on the type of secret. Apart from the risk of further data exposures and attack escalations, you might even suffer financially if paid services or resources are abused.

In most cases, sensitive information is exposed as a result of carelessness and insufficient safeguards in the SDLC process. As a security best practice, the security testing you perform before any update to a production environment (especially for public-facing websites) should include checks for sensitive data disclosure. You can easily run dedicated checks from your dynamic application security testing (DAST) solution, as shown above with the custom script for Invicti, and open-source tools such as TruffleHog are also available to sniff out any sensitive data you might be exposing.