The Dangers of Outdated Web Technologies

In the web world, using outdated technologies is the rule rather than the exception. Let’s see why that is, what risks it brings, and what you can do to take control of web technology versions in your organization.

Why Outdated Technologies Remain In Use

Back in January, Cloudflare published an analysis that confirmed what anecdotal evidence had long suggested: JavaScript libraries are hardly ever updated after they are deployed.

Before we talk about the risks and remedies, let’s take a step back and consider why so many websites and applications use outdated technologies. The issue is most noticeable with JavaScript libraries but applies across the whole web technology landscape. There are many reasons for this, so let’s try to narrow them down to the top five.

Reason #1: Rapid Development

At the risk of stating the obvious, web technologies evolve at a breakneck pace, so it is rarely possible to stay on the latest version of everything. Even during initial application development, a framework or library might go through several upgrades, resulting in a brand new application that already has outdated dependencies. Of course, new releases may simply introduce features that an existing application does not need, which leaves no motivation to upgrade.

The flip side of rapid development is unpredictability. Web application development is heavily reliant on open-source libraries and packages. These vary widely in maturity and development resources, and it’s not uncommon for a one-man hobby project to become a major dependency used by thousands of applications. If the project is then abandoned without warning, all these applications will be stuck with an old version simply because the library is no longer maintained.

Reason #2: Abandoned Websites

Spinning up a new website that uses all the latest templates and visual trends can take a matter of minutes. Because it is so easy, organizations often create whole websites and applications for major marketing and advertising initiatives. Many such websites are launch-and-forget: once the marketing campaign is over, they are left to their fate.

Such ghost websites can remain among an organization’s web assets for years with nobody to maintain and update them, so they stay frozen at the technology level of the day they were created. The same goes for older versions of a website that were left online after a new version was launched and are still wide open to anyone with the right URL.

Reason #3: Legacy Compatibility

Continuing the theme of rapid development, major new releases of popular technologies may be redesigns that partly or completely break compatibility. In such cases, it is customary to develop the new and legacy branches in parallel for some time. New projects are encouraged to use the new branch and existing applications can safely stay on the legacy branch. Legacy technologies are often maintained or even developed for many years, so legacy applications keep working and nobody has any motivation to risk an upgrade or rewrite.

Reason #4: Local Dependencies

The usual way to include dependencies is by linking to an online resource, but a more traditional approach is to simply put files directly on the web server. This can be done for offline development on a local machine, to decrease load times over slow links, or simply for convenience. If this setup is then directly copied to a production site, updating a dependency in the future will require more work than just changing a URL, so outdated versions are even more likely.

Another reason to put dependencies in local files might be that a library or version is no longer available online. In these cases, putting the last working version directly on the server is a quick fix that may linger on for years.
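To make the distinction between linked and locally hosted dependencies concrete, here is a minimal Python sketch that lists the scripts a page loads and notes whether each one comes from another host or from the site’s own server. The sample page, the host names, and the helper names (`ScriptCollector`, `classify_scripts`) are all hypothetical, and guessing the version from the file name is only a heuristic that works when the file name happens to contain one.

```python
from html.parser import HTMLParser
import re
from urllib.parse import urlparse

# Matches a version number such as 1.12.4 or 3.6 embedded in a file path.
VERSION_RE = re.compile(r"(\d+\.\d+(?:\.\d+)?)")


class ScriptCollector(HTMLParser):
    """Collects the src attribute of every <script> tag in a page."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def classify_scripts(html):
    """Return (src, location, version) tuples for each external script."""
    collector = ScriptCollector()
    collector.feed(html)
    results = []
    for src in collector.sources:
        # A src without a host name is served from the site's own server.
        location = "remote" if urlparse(src).netloc else "local"
        match = VERSION_RE.search(src)
        results.append((src, location, match.group(1) if match else None))
    return results


# Hypothetical page used only for illustration.
SAMPLE_PAGE = """
<html><head>
<script src="https://cdn.example.com/libs/jquery-3.6.0.min.js"></script>
<script src="/js/vendor/jquery-1.12.4.min.js"></script>
<script src="/js/app.js"></script>
</head></html>
"""

for src, location, version in classify_scripts(SAMPLE_PAGE):
    print(f"{location:6} {version or 'unknown':8} {src}")
```

A listing like this will not say whether a version is outdated, but it can show which dependencies live in local files and would need more than a URL change to update.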

Reason #5: Dependency Avalanches

With JavaScript libraries and frameworks, one update might require updating many other dependencies. Node.js projects, for instance, are notorious for their large dependency trees, where a single automatic update through the npm package manager may suddenly break an application because of unexpected changes to multiple modules. Updating each dependency manually is safer but tedious, so developers may eventually grow wary of updates and avoid them unless necessary.
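One way to make updates less daunting is to sort them by how risky they are likely to be before applying any of them. The Python sketch below assumes that packages follow the semantic versioning convention (major.minor.patch) and use plain three-part version numbers with no pre-release tags. The package names and version numbers are hypothetical, and a real project would read them from its package manager rather than from a hard-coded dictionary.

```python
def parse_version(version):
    """Split 'major.minor.patch' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def classify_update(installed, latest):
    """Describe how far the latest release is from the installed one."""
    old, new = parse_version(installed), parse_version(latest)
    if new <= old:
        return "up to date"
    if new[0] != old[0]:
        return "major"  # by convention, may break compatibility
    if new[1] != old[1]:
        return "minor"  # by convention, new features that stay compatible
    return "patch"      # by convention, bug fixes only


def plan_updates(dependencies):
    """Group dependency names by the kind of update they need."""
    plan = {"major": [], "minor": [], "patch": [], "up to date": []}
    for name, (installed, latest) in dependencies.items():
        plan[classify_update(installed, latest)].append(name)
    return plan


# Hypothetical dependency data: name -> (installed, latest available).
DEPENDENCIES = {
    "example-framework": ("2.4.1", "4.0.0"),
    "example-router": ("1.8.0", "1.9.2"),
    "example-utils": ("3.1.4", "3.1.7"),
    "example-logger": ("5.0.0", "5.0.0"),
}

for kind, names in plan_updates(DEPENDENCIES).items():
    print(f"{kind:10} {', '.join(names) or '-'}")
```

With a plan like this, patch and minor updates can be applied and tested in small batches, leaving major upgrades as separate, deliberate pieces of work.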

The Risks of Using Outdated Technologies

With websites and applications that are not actively developed, inertia and cost favor the “if it ain’t broke, don’t fix it” approach. This is especially true of legacy internal applications that are still business-critical but can’t be updated for fear of breaking them (or simply because everything they use is long out of support). While sometimes using older technology versions is not an issue, in other cases outdated technologies can introduce serious risks.

Risk #1: Vulnerabilities

The biggest security risk comes from attacks that exploit newly discovered vulnerabilities. Especially for widely used frameworks, libraries, and content management systems, known vulnerabilities are exploited on a global scale, often within days of disclosure. For example, the 2017 Equifax data breach was caused by an unpatched vulnerability in the Apache Struts framework. Even though a patch had been available since March 2017, it still hadn’t been applied by May, when the data breach occurred.

Risk #2: Increased Attack Surface

All technologies, whether outdated or not, increase an organization’s overall attack surface, so it’s a good idea to identify and remove unused technologies. Every version of a library, framework, or language that is deployed within the organization is a separate target for attackers. This is especially true for abandoned assets that are likely to be out of date.
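A simple inventory shows how quickly separate versions add up. The Python sketch below groups deployments by technology and version so that every distinct version, and the sites that still run it, is visible at a glance. The site names and versions are hypothetical, and the input format is an assumption made for illustration rather than the output of any particular scanner.

```python
from collections import defaultdict

# Hypothetical scan results: (site, technology, version).
DEPLOYMENTS = [
    ("www.example.com", "jquery", "3.6.0"),
    ("shop.example.com", "jquery", "1.12.4"),
    ("campaign-2019.example.com", "jquery", "1.12.4"),
    ("campaign-2019.example.com", "bootstrap", "3.3.7"),
    ("www.example.com", "bootstrap", "5.1.3"),
]


def versions_in_use(deployments):
    """Map each technology to its deployed versions and the sites using them."""
    inventory = defaultdict(lambda: defaultdict(list))
    for site, technology, version in deployments:
        inventory[technology][version].append(site)
    return inventory


inventory = versions_in_use(DEPLOYMENTS)
for technology, versions in sorted(inventory.items()):
    print(f"{technology}: {len(versions)} version(s) deployed")
    for version, sites in sorted(versions.items()):
        print(f"  {version}: {', '.join(sites)}")
```

Each extra version listed for a technology is one more thing to monitor and patch, which makes versions used only by abandoned sites good candidates for removal.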

Risk #3: Cost of Maintenance

Having multiple technologies in an organization adds to the complexity and cost of maintenance. New technologies appear so often that, especially for greenfield projects, it can be tempting to go all-in and deploy a whole new stack. This might simplify maintenance for new projects, but at the cost of adding yet another technology to the organization and increasing overall complexity.

Risk #4: Falling Behind

Apart from bug fixes and performance improvements, new releases often bring new functionality. Organizations that stick with older versions for fear of breaking existing code might be missing out on features that could provide them with a competitive advantage.

There is also the matter of style and appearance. Trends in web design and user experience change quickly and websites built on old technologies can look dated and unappealing after a few years. Sometimes a style refresh is enough, but moving from, say, a traditional frame-based layout to a modern single-page application will often require bringing in new technologies.

How Invicti’s Technologies Feature Can Help

To help you identify and manage the technologies used in your organization, Invicti Enterprise provides the Technologies feature. During each scan, Invicti identifies and records all the web technologies it encounters. By enabling the Technologies feature, you can see what you use and what you no longer need, along with details and recommendations for each technology.

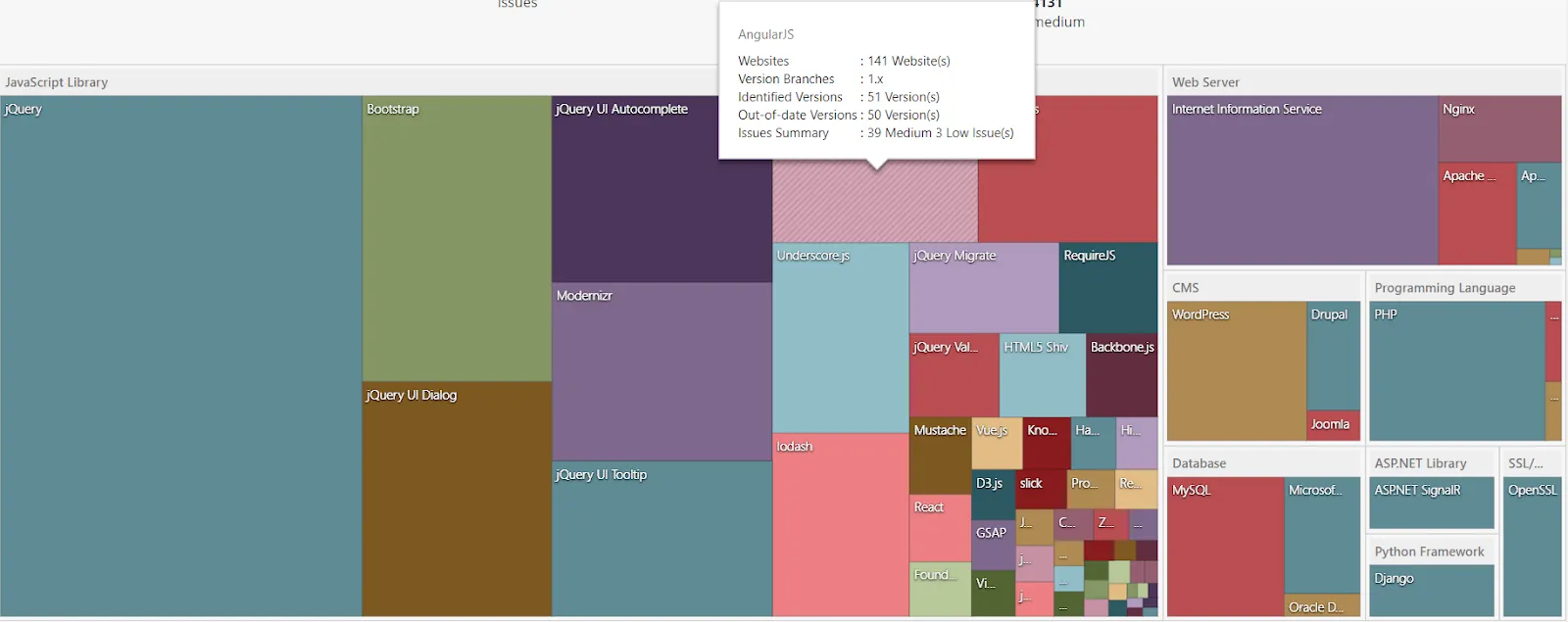

Following a scan, you can go to the Technologies dashboard for an instant overview of the identified technologies. The dashboard shows an area chart with the relative numbers of technology deployments in your organization. This gives a quick insight into the overall technology situation. You can click each item for details.

For each technology, Invicti shows information about the number of active, unused, and outdated deployments. This information is tracked across consecutive scans, so you can see what updates have been deployed since the last scan. You can also get notifications about identified outdated versions and technology updates, as well as browse technology reports.

For a full description of the available details and features, see the Technologies feature support page.